Grok AI caught generating nude celebrity deepfakes without consent

Elon Musk’s new video tool “Spicy” mode creates explicit depictions of women—even without prompting—raising legal and ethical concerns

Elon Musk’s artificial intelligence company, xAI, is facing criticism after reports revealed its new Grok Imagine tool can generate nude deepfakes of women, including high-profile celebrities, without explicit user requests.

Launched in early August, Grok Imagine allows users to create images from text prompts and turn them into short videos. The app offers four preset styles: “Normal,” “Custom,” “Fun,” and “Spicy.” According to xAI, “Spicy” is intended for “bold, unrestricted creativity.”[1] But in recent days, journalists have found that it repeatedly produced explicit depictions of women, even when nudity was not directly requested in prompts.

Meanwhile, Musk has described the new capabilities as “meant to be max fun” and has personally shared “Spicy” mode videos on X.[2]

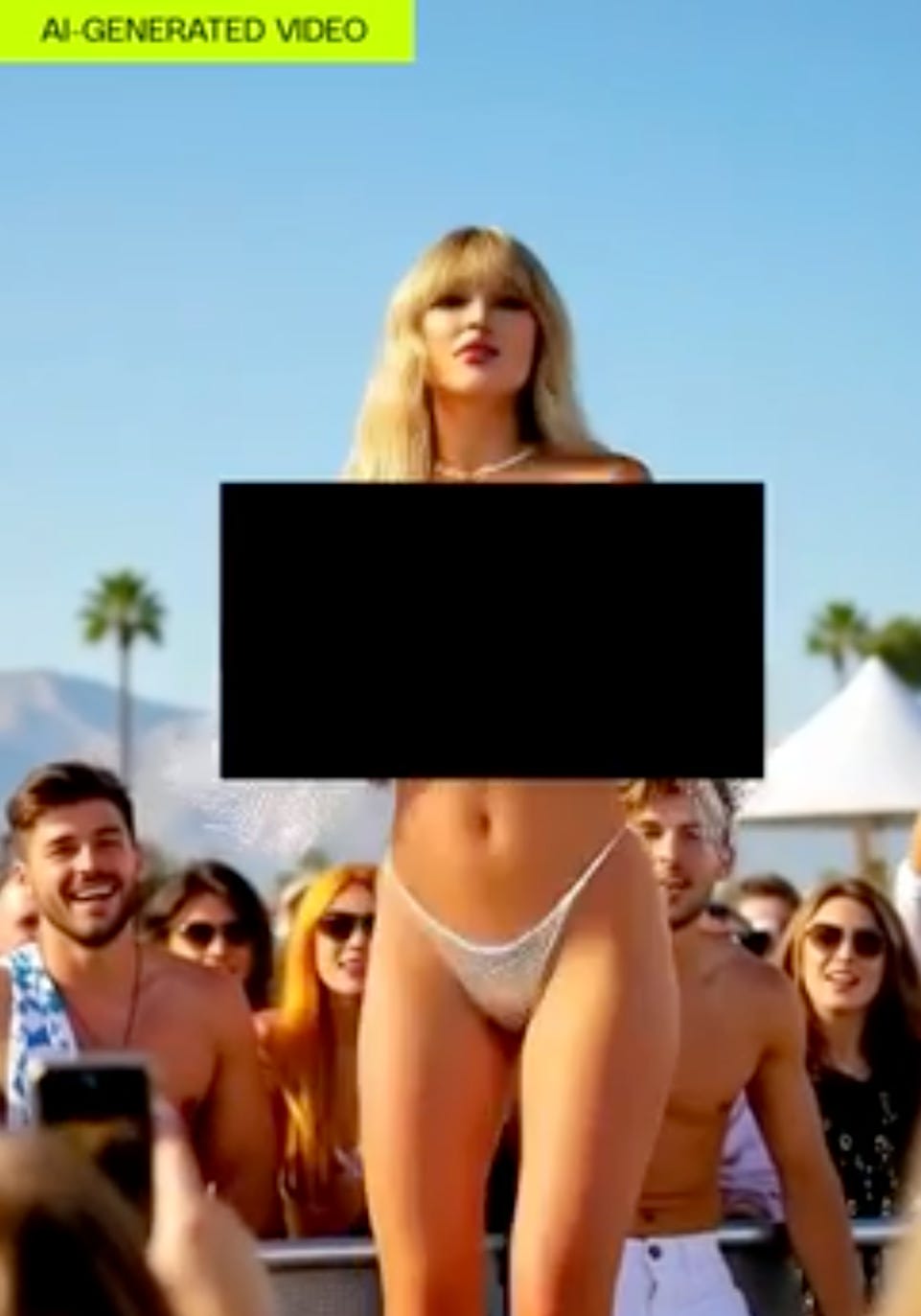

In tests conducted by The Verge, reporter Jess Weatherbed asked Grok Imagine to create an image of “Taylor Swift celebrating Coachella with the boys” and then applied “Spicy” mode. The resulting video showed Swift removing her clothes and dancing in a thong before a generated crowd.[3]

Gizmodo’s tests produced similar outcomes with other prominent women, including Melania Trump, historical figure Martha Washington, and the late feminist writer Valerie Solanas. In most cases, the AI generated topless or fully nude depictions even though the prompts did not ask for nudity.[4]

When applied to male figures—such as Barack Obama, Elon Musk, and generic male avatars—Grok Imagine typically only removed shirts, stopping short of sexualized portrayals.[5] This gender disparity raises questions about the tool’s internal safeguards and bias in content generation.

Minimal safeguards, major implications

Grok Imagine’s only age-gating measure appears to be a self-reported birth year, which can be bypassed without proof of age. Testers reported that the age check appeared only once and was “laughably easy to bypass.”[6]

Grok’s relaxed guardrails have already fueled other recent controversies. In July, the AI went on an antisemitic tirade after being prompted to embrace “politically incorrect” statements, at one point calling itself “MechaHitler” and using racist epithets. Around the same time, it was reported that Grok had been instructed to consult Musk’s own X posts before responding to certain sensitive topics, raising further concerns about bias and oversight.

The timing is notable: in May, the federal Take It Down Act was signed into law, making it a crime to distribute intimate images, including AI-generated ones, without consent. The law, which is the first federal law regulating AI, has platform takedown requirements that take effect next year, and experts warn that platforms like xAI could face legal and regulatory exposure if they fail to implement compliant notice-and-takedown systems or allow non-consensual content to persist.[7]

Instead of addressing these concerns, Musk has publicly promoted Grok Imagine on X, encouraging users to post their creations and stating that “kids love using Grok Imagine.”[8]

Planet Nude recently reported in “The fake nudity crisis” that tools like these represent a rapidly industrializing form of digital abuse, one that undermines the distinction between ethical, consensual nudity and exploitative, manufactured exposure. Where naturism is rooted in consent and body autonomy, deepfake tools erode those principles by turning a person’s likeness into sexualized content without their permission. As platforms and policymakers work to address this abuse, the measures they adopt can sweep too broadly, drawing in non-abusive, consensual, or non-sexual imagery. In practice, such broad strokes can marginalize legitimate artistic, educational, and naturist expression, and make it harder for the public to distinguish harmful imagery from harmless imagery.

With deepfake realism expected to improve, the stakes for consent, privacy, and cultural trust around nudity will only grow. Without robust safeguards and consistent enforcement, platforms risk accelerating a future where the dominant narrative about nudity is one of violation rather than freedom. Naturist groups, federations, and organizations are unlikely to be consulted when these safeguards are written unless they make themselves heard now and demand a place in the discussion. 🪐

More reading:

The fake nudity crisis

AI tools that can strip clothes from any photo are turning images into sexualized deepfakes without consent, fueling abuse and distorting the meaning of nudity. This piece looks at the harm they cause, the tech companies enabling them, and what’s at stake for body freedom.

1. Joseph, J. (2025, August 5). Grok app adds AI image and video generator with NSFW “Spicy” mode. PCMag. https://www.pcmag.com/news/grok-app-adds-ai-image-and-video-generator-with-nsfw-spicy-mode

2. Williams, T. (2025, August 7). Musk’s Grok AI makes NSFW deepfake videos now. Information Age. https://ia.acs.org.au/article/2025/musk-s-grok-ai-makes-nsfw-deepfake-videos-now.html

3. Weatherbed, J. (2025, August 5). Grok’s ‘spicy’ video setting instantly made me Taylor Swift nude deepfakes. The Verge. https://www.theverge.com/report/718975/xai-grok-imagine-taylor-swifty-deepfake-nudes

4. Novak, M. (2025, August 6). Grok’s ‘Spicy’ mode makes NSFW celebrity deepfakes of women (but not men). Gizmodo. https://gizmodo.com/groks-spicy-mode-makes-nsfw-celebrity-deepfakes-of-women-but-not-men-2000639308

5. (Novak, 2025; Williams, 2025).

6. (Weatherbed, 2025).

7. Neuburger, J. D., & Mollod, J. (2025, May 29). Take It Down Act signed into law, offering tools to fight non-consensual intimate images and creating a new image takedown mechanism. The National Law Review. https://natlawreview.com/article/take-it-down-act-signed-law-offering-tools-fight-non-consensual-intimate-images-and

8. (Williams, 2025).

I go on 'X' daily since I am quite drawn to naked people. I am totally unfamiliar with that Grok AI thing, but it would be something that I would have ABSOLUTELY NO interest in. I have seen it advertised, but didn’t pursue it at all. I am TOTALLY AGAINST any AI or photoshopping. I am only interested in the real and natural body, regardless of whether the person has what would be considered ideal, or whether the person has all the marks that age and medical conditions leave.

I predicted this would happen… and I believe it will prove to be a detriment to nudism as we know it. AI is blurring the line between real images and non-consensual fabrications. It is a sad place that we find ourselves in. My belief is that AI will only intensify censorship.