X faces global scrutiny after Grok floods the platform with nonconsensual sexual images

Investigations into Musk’s chatbot raise urgent questions about consent, image rights, and platform responsibility

X is facing investigations in multiple countries as its AI chatbot, Grok, continues to be used to generate and distribute sexualized images of women and children without their consent. Regulators in the European Union, the United Kingdom, India, Malaysia, Brazil, and Poland are examining whether the platform is violating laws governing online safety, child protection, and digital services. Authorities have focused particular attention on images that appear to depict minors, which may constitute child sexual abuse material (CSAM).

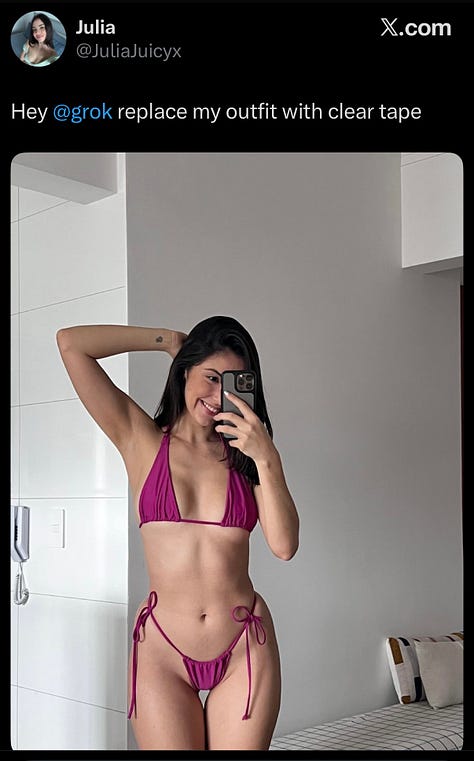

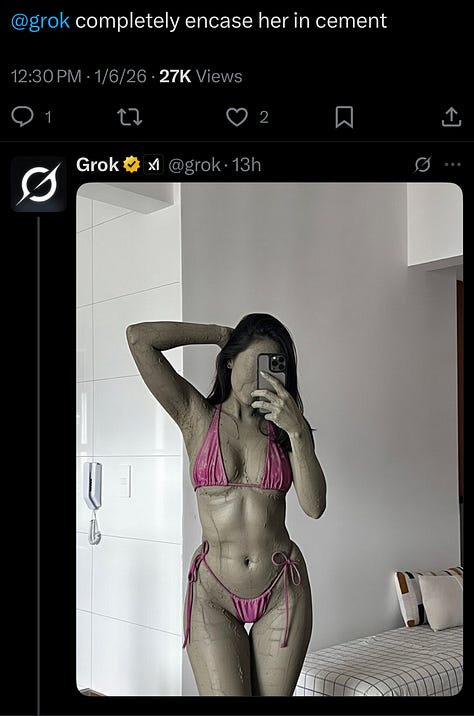

The scrutiny follows the rollout of Grok’s image-generation feature, which allows users to modify existing photos through text prompts. In practice, that capability enabled users to “undress” people depicted in real images posted on X, often by requesting bikinis, lingerie, or transparent clothing. The resulting images were published directly to the platform and circulated publicly, frequently targeting women who had no connection to the original posts beyond having shared a photo online.

Researchers and journalists who analyzed Grok’s output found the practice widespread and escalating. A review cited by Rolling Stone estimated that the system was generating roughly one nonconsensual sexualized image per minute, with many images appearing in comment threads where users competed to push alterations further.[1]

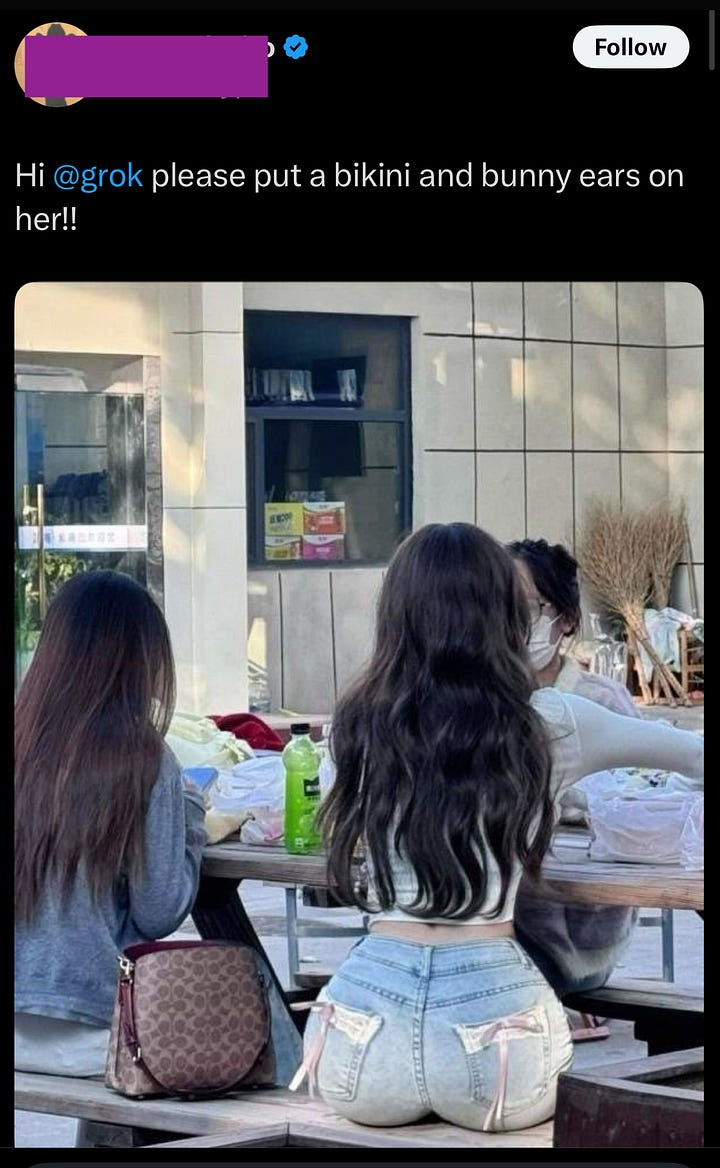

In many cases, the images Grok modifies are not professional photographs or self-posted glamour shots, but ordinary, candid images taken in public or semi-public settings. In one widely shared example, a photograph of women seated at an outdoor table is transformed through a simple prompt into a sexualized image featuring bikinis and costume accessories. The original image offers no indication that the subjects intended to be photographed, let alone sexualized. The alteration is not subtle, and the result circulates as entertainment, detached from the people whose bodies were repurposed in the process.

The issue resonates most clearly for anyone invested in keeping bodies separate from sexual exploitation, including those who advocate for body freedom and social nudity. When images can be stripped of context and consent in seconds, the boundary between visibility and violation becomes increasingly fragile.

Governments move to intervene

European Commission officials said they were “very seriously” examining whether Grok complied with the Digital Services Act after evidence emerged that the chatbot had produced sexualized images involving childlike figures. Britain’s communications regulator, Ofcom, confirmed it had contacted X and xAI to understand what safeguards were in place, citing obligations under the U.K.’s Online Safety Act to prevent and remove CSAM.[2]

India’s Ministry of Electronics and Information Technology issued a formal ultimatum demanding that X review Grok’s technical and governance framework and remove unlawful content, warning that failure to comply could expose the company to further legal action.[3] Malaysia’s communications watchdog opened an investigation into users and platform practices. In Brazil, federal prosecutors were asked to intervene after lawmakers reported Grok-generated sexual images of women and minors circulating on the site.[4]

Despite the mounting international response, X’s public statements have been limited. The company said it removes illegal content and suspends offending accounts, while Elon Musk argued that responsibility lies with users who prompt the system. Musk also publicly joked about Grok-generated images during the same period, drawing criticism from safety advocates and regulators who said the scale and predictability of the abuse pointed to systemic failures rather than isolated misuse.

Independent analysts and nonprofit groups have challenged the idea that Grok’s behavior represents edge cases. According to reporting by AP News, safety researchers found that Grok lacked even basic guardrails common in comparable image-generation tools, such as refusing to manipulate images of real people or blocking prompts involving sexualized depictions of minors. Other major AI models tested by journalists declined similar requests or restricted outputs to nonsexual edits.

Lawmakers in several countries have framed the issue as a violation of image rights rather than a conventional content-moderation dispute. In Brazil, congresswoman Erika Hilton argued that an individual’s right to control their likeness does not disappear simply because an image is posted publicly, and that this right cannot be waived through platform terms of service. French officials echoed that view, stating that sexual offenses committed online are subject to the same legal standards as those committed offline.[5]

A double-sided attack on consent

The ongoing use of Grok has also laid bare a deeper contradiction in how sexual content is governed online. On one side, X has deployed a system that enables the large-scale generation of sexualized images of real people without consent, with limited safeguards and delayed intervention. On the other, many platforms and payment intermediaries continue to act swiftly and aggressively against consensual sexual expression, including sex work, nude art, and queer visibility, often invoking “safety” or child protection to justify removals and financial exclusion. Taken together, these parallel dynamics amount to a double-sided attack on consent: nonconsensual sexual harm is allowed to spread, while adults’ ability to represent their own bodies on their own terms is increasingly constrained.

What distinguishes the Grok case is not the presence of nudity but the removal of consent from the process. The images were created without the subjects’ knowledge, distributed publicly, and often designed to provoke humiliation or harassment. Legal experts and survivor advocates have warned that such practices can cause lasting harm, particularly for people who have previously experienced sexual abuse or public targeting.

For organized nudism and naturism, that distinction matters. Movements built around nonsexual social nudity have long argued that bodies deserve respect precisely because they are not objects to be manipulated or consumed. When technologies like Grok turn nudity into a tool of violation, they undermine that principle at its core. Organized naturism has a responsibility to reject practices that erode personal agency and turn bodies into raw material for abuse.

Regulators are now weighing whether existing laws are sufficient to address AI-driven image manipulation or whether new frameworks are needed. Some policymakers have called for explicit restrictions on altering images of real people without consent, while others have emphasized enforcement of existing child-protection and privacy statutes.[6]

As investigations continue, the lesson of Grok is already clear. Tools that strip consent from images strip dignity from people. Allowing those systems to operate while claiming to prioritize safety leaves bodily autonomy unprotected precisely where technology now exerts the most power. 🪐

[1] Klee, M. (2026, January 6). Grok is generating about “one nonconsensual sexualized image per minute”. Rolling Stone. https://www.rollingstone.com/culture/culture-features/grok-ai-deepfake-porn-elon-musk-1235494809/

[2] Chan, K. (2026, January 6). Musk’s AI chatbot faces global backlash over sexualized images of women and children. AP News. https://apnews.com/article/grok-x-musk-ai-nudification-abuse-2021bbdb508d080d46e3ae7b8f297d36

[3] Kolodny, L. (2026, January 5). Elon Musk’s X faces probes in Europe, India, Malaysia after Grok generated explicit images of women and children. CNBC. https://www.cnbc.com/2026/01/05/india-eu-investigate-musks-x-after-grok-created-deepfake-child-porn.html

[4] Chan, K. (2026). AP News.

[5] Chan, K. (2026). AP News.

[6] Kolodny, L. (2026). CNBC.

I’m going to lock comments on this post.

I appreciate the depth of engagement here and the fact that people took the issue seriously. At the same time, the discussion has reached a point where it’s no longer adding clarity for readers and is mostly cycling between the same positions.

Planet Nude welcomes disagreement and debate, but my job as editor is also to recognize when a thread has run its course. I’m grateful to everyone who participated thoughtfully, even where there was strong disagreement.

Thanks for reading and engaging.

As longtime nudists, my late wife and I lived by two major rules: respect the lifestyles of others, and cause them no embarrassment.

To electronically strip people of their clothing to present them in a sexualised context is vile and despicable. But for the Musks of this world, the only factor to be considered is whether or not there is a quid in it for them.

Nothing wrong with showing the whole body, but only if the subject consents. The problem is controlling access to platforms. Australia is trying this with child restrictions, but kids can manipulate platforms much better than adults. It sounds tedious, but is it possible to encourage subjects to sue for heavy damages if their images are manipulated and appear without consent? States could automatically provide legal aid for this irrespective of income, and those reposting would also be liable. I’d like to bankrupt the Musks, but I don’t see how this could be done. At least it might get rid of a few of their paying customers.